There’s a rather disturbing trend that I’ve noticed in recent years: sites take forever to load. It’s a little distressing, if only because our internet connections keep getting faster, yet on average (anecdotally) page speed metrics haven’t changed much at all.

One would assume that faster internet would speed things up, but it hasn’t panned out that way for a specific reason: site payloads are getting larger.

- Faster internet → faster load

- Larger payload → slower load

I’m not alone in thinking this, and it has been a concern for many years already, judging by ex-Googler Marissa Mayer’s revelation that even a half-second delay in page load caused a 20% drop in traffic, or the claim that Amazon saw a 1% decrease in sales for every 100ms of delay.

More recent studies suggest that milliseconds are critical: even a 100ms delay can hurt conversion rates by 7%.

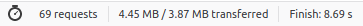

Even taking these numbers with a grain of salt, the conclusion is inescapable: page speed matters. In a world of instant gratification, how are we satisfied with the state of the web? I took a look at some common culprits and measured how long each site took to load from start to finish¹, including all of the ads, trackers, and media.

Facebook Messenger (/messages)

Twitter

New York Times

Washington Post

Gmail

oof.

Compared to some objectively quicker sites:

Okay, this last one was a bit self-serving, but it goes to show that while HN and Prog21 are not web apps, NodeBB is, and can keep up with non-web apps just the same.

Why?

In general:

- The more features a site has, the larger the server-side or client-side payload.

- The more analytics or trackers a site has, the larger the payload.

- The people responsible for page speed (the developers) often work in local environments, which do not suffer from common network constraints (congestion, packet loss, etc.). Programmers also tend to have access to better (read: faster, more reliable) internet connections, so in essence, they are blind to the reality of their app’s speed.

… but are these the only reasons behind slow page loads?

Over time, ever-faster internet mitigates these issues, but it also opens the door to shoving more data down the larger pipe, something we’ve been abusing for far too long. There’s even a term for this: induced demand. Just as adding more lanes to a road doesn’t reduce traffic (it instead incentivises more people to drive, leading to the same level of congestion), the temptation to send more data to the end user is tempered only by the connection speed of the average user. You may have heard a tongue-in-cheek variant of this: work expands to fill the available time!

All those reasons aside, however, I think a significant but rarely discussed reason is the use of frameworks, and the bloat that accompanies them. What I say below is controversial at best, given the popularity of frameworks industry-wide, but it is what I (and the NodeBB team) believe, and I think our avoidance of frameworks is key to making NodeBB fast.

Both bespoke and commercial frameworks can suffer from bloat for a simple reason: abstracting logic away, combined with enabling every capability for everything², leads to too much overhead, even when optimized. (For example: we want to let the developer arbitrarily assign click events to any item on the page, so let’s attach click handlers to everything on the page. This is a contrived example, purely for demonstration. I don’t think any frameworks do this… or at least, I certainly hope not.) The fact that tree shaking even exists is proof that we have gone too far! We’re treating the symptoms of the disease instead of applying the cure.

This sort of “shotgun approach” to feature enhancement is doing a disservice to developers everywhere. The cost of a little bit of convenience might be small at the micro level, but applied to the entire application, it becomes a great hindrance. The real tragedy is that developers won’t even know they’re imposing many extra CPU cycles on the end user until they’re deep in the work and have gone too far to reverse course. Think of the boiling frog metaphor: the application slows down more and more over time, but you simply don’t notice, because each day it is only marginally slower than the day before…
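To make the contrived click-handler example concrete, here is a minimal TypeScript sketch comparing it with event delegation, the common alternative in which a single listener on a shared ancestor handles clicks for all of its descendants. To keep it runnable outside a browser, `FakeNode`, `attachEverywhere`, `attachDelegated`, and `click` are hypothetical stand-ins for DOM elements, `addEventListener` calls, and event bubbling; they are not any framework’s API.

```typescript
// Hypothetical stand-in for DOM elements so the comparison runs without a
// browser. In a real page these would be Elements with addEventListener.
type Handler = (target: FakeNode) => void;

class FakeNode {
  children: FakeNode[] = [];
  listeners: Handler[] = [];
  parent: FakeNode | null;
  id: string;

  constructor(id: string, parent: FakeNode | null = null) {
    this.id = id;
    this.parent = parent;
    if (parent) parent.children.push(this);
  }
}

// Collect every node in the subtree rooted at `node` (depth-first).
function allNodes(node: FakeNode, out: FakeNode[] = []): FakeNode[] {
  out.push(node);
  for (const child of node.children) allNodes(child, out);
  return out;
}

// The contrived "shotgun" approach: one listener on every element, just in
// case. Returns how many listeners were registered.
function attachEverywhere(root: FakeNode, handler: Handler): number {
  const nodes = allNodes(root);
  for (const node of nodes) node.listeners.push(handler);
  return nodes.length;
}

// Event delegation: one listener on the root. When a click bubbles up to it,
// walk from the clicked element towards the root and call the handler of the
// nearest node that has one (it receives that matched node).
function attachDelegated(root: FakeNode, handlers: Record<string, Handler>): number {
  root.listeners.push((target) => {
    for (let n: FakeNode | null = target; n !== null; n = n.parent) {
      if (Object.prototype.hasOwnProperty.call(handlers, n.id)) {
        handlers[n.id](n);
        return;
      }
    }
  });
  return 1;
}

// Simulate a click that bubbles from `target` up to the root, firing every
// listener registered on each node along the way.
function click(target: FakeNode): void {
  for (let n: FakeNode | null = target; n !== null; n = n.parent) {
    for (const listener of n.listeners) listener(target);
  }
}
```

With a root holding 1,000 items, the shotgun approach registers 1,001 listeners and fires two of them for a single click (the item’s own and the root’s), while delegation registers one listener regardless of page size. This illustrates the overhead argument; it is not a benchmark of any particular framework.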

How?

We’ve gotten to this point incrementally.

With frameworks, the benefits are immediately obvious. Quick prototyping and developer-friendly interfaces are nice, but the drawbacks come later. The sunk cost fallacy applies, in that by the time we notice what is happening, we’ve gone too far down the hole to stop.

Feature creep in general contributes greatly. We develop excess functionality without trimming the fat, and we pay for it with an app or website that might do an awful lot, but is a pain to use. Perhaps it’s time for us to step back and Marie Kondo our codebases: does this feature bring us joy?

How is NodeBB different?

In our case, a commitment to speed begins right at the start. We often explain to customers that a huge part of NodeBB’s functionality and flexibility comes via our plugin ecosystem. Almost anything can be done via a plugin, and while we bundle some plugins in core, we keep the core as lean as possible so that the out-of-the-box install can showcase NodeBB at its speediest. Of course, adding more plugins will slow NodeBB down, but this is where the second key advantage of our plugin system applies: what one person deems necessary for their forum is superfluous for another. Delivering a kitchen sink is helpful, but it comes at a significant cost.

We do not use frameworks, only libraries and helper modules. The downside is that we need to spend a little extra time wiring up the various components of each feature. We don’t have a framework to do the boring work for us, although for DRY purposes we abstract a couple of things away (e.g. ajaxify.js, which handles page transitions and history management). On the upside, it gives us more time to think critically about the feature and its design. We’re not merely plugging widgets into sockets, but crafting a unique implementation.

For NodeBB v2, we’re planning to trim the fat (as mentioned above) on a couple of parts of core. We want to step back and rethink both features and implementation. At the start of all this, we chose Node.js in part due to its speed, so let’s not squander this gift.

Web applications aren’t slow by default

Web applications as a whole have a reputation for being sloooow. There’s the expectation that a web application suffers from a chronic lack of speed compared to native applications, and this has been going on for years!

This reputation is partially deserved: the bulk of a native application’s code is already on disk (it is installed, not merely downloaded and run on demand). Native apps just need to load the data into memory, and RAM is fast! The only exception is when a native application needs to fetch content or data from the web; in that case, the playing field is level.

However, I do not accept this as an excuse. With appropriate modularization and liberal use of the on-disk cache, a web app should be able to approach the speed of a native application.
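A cache-first lookup is one way to lean on the on-disk cache: an asset is fetched over the network once, and every later load is served locally. The sketch below assumes hypothetical `AssetCache` and `Fetcher` interfaces (and an in-memory `memoryCache` for testing) so it runs anywhere; in a browser, this logic would typically live in a service worker backed by the Cache Storage API.

```typescript
// Hypothetical cache interface; a browser version would wrap the Cache
// Storage API, and a persistent one would write to disk.
interface AssetCache {
  get(url: string): Promise<string | undefined>;
  put(url: string, body: string): Promise<void>;
}

// Hypothetical network fetcher returning the response body as text.
type Fetcher = (url: string) => Promise<string>;

// Cache-first: serve from the cache when possible, only hit the network on a
// miss, and store the result so the next load is local.
async function cacheFirst(url: string, cache: AssetCache, fetchFromNetwork: Fetcher): Promise<string> {
  const cached = await cache.get(url);
  if (cached !== undefined) return cached;
  const body = await fetchFromNetwork(url);
  await cache.put(url, body);
  return body;
}

// In-memory AssetCache for demonstration only; nothing here is persisted.
function memoryCache(): AssetCache {
  const store: Record<string, string> = {};
  return {
    async get(url) {
      return Object.prototype.hasOwnProperty.call(store, url) ? store[url] : undefined;
    },
    async put(url, body) {
      store[url] = body;
    },
  };
}
```

Requesting the same URL twice through `cacheFirst` calls the fetcher only once. A real deployment would also need a way to invalidate stale entries (for example, versioned asset URLs), which this sketch leaves out.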

Fortunately, we can use this reputation to our advantage. Users already expect a website to take 1-2 seconds (if not more) just to become usable. Make this your goal! A hard limit of 1-2 seconds for your Time To Interactive is very much achievable.
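One way to hold yourself to that limit is a performance budget that fails a check when a page goes over it. The sketch below assumes you already collect Time To Interactive figures from several lab runs per page; the `PageTiming` shape and the `pagesOverBudget` helper are hypothetical. It takes the median per page, since a single run can be noisy, and reports any page above a 2-second budget.

```typescript
// Hypothetical shape of one lab measurement; how it is collected (e.g. a
// headless browser run in CI) is outside the scope of this sketch.
interface PageTiming {
  url: string;
  timeToInteractiveMs: number;
}

// Upper end of the 1-2 second Time To Interactive target.
const TTI_BUDGET_MS = 2000;

// Median of a non-empty list of numbers (averages the two middle values
// when the count is even).
function median(values: number[]): number {
  const sorted = values.slice().sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 0 ? (sorted[mid - 1] + sorted[mid]) / 2 : sorted[mid];
}

// Group runs by URL and return the pages whose median TTI exceeds the budget.
function pagesOverBudget(runs: PageTiming[], budgetMs: number = TTI_BUDGET_MS): string[] {
  const byUrl: Record<string, number[]> = {};
  for (const run of runs) {
    if (!Object.prototype.hasOwnProperty.call(byUrl, run.url)) byUrl[run.url] = [];
    byUrl[run.url].push(run.timeToInteractiveMs);
  }
  return Object.keys(byUrl).filter((url) => median(byUrl[url]) > budgetMs);
}
```

For example, three runs of `/` at 900, 1,000 and 1,100 ms have a median of 1,000 ms and pass, while four runs of `/recent` at 1,900, 2,100, 2,300 and 2,500 ms have a median of 2,200 ms and would be flagged.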

A couple takeaways:

- If you are fortunate enough to work on a web application without a framework, you’re already at an advantage speed-wise.

- Never be satisfied with playing second fiddle to native applications. Always strive to level the playing field by looking into things like Progressive Web Applications³.

- Men and women work hard every single day to make the web faster (e.g. faster internet, faster JavaScript engines). Don’t squander these benefits by sending more data to your end users just because you can.

- Periodically, take a step back, slim down, and cut features. Look at functionality with a critical eye and ask whether it is truly needed, and whether it delivers on everything it promised. The effort it took to build something is not, in and of itself, a justification for maintaining it.

- Appreciate the unintentional gift that users now expect websites to be slow. Buck the trend, and prove them wrong.

¹ For this completely unscientific test, I opened the developer console, disabled the cache, and refreshed the page. Note that the numbers don’t tell the full story. In many of the tests, the site had loaded after 4-5 seconds. Most sites were interactive within 5 seconds, and the inflated numbers are purely due to additional calls to pre-fetch data, ads, images/video/media, or trackers. That said, 5 seconds to first click is a tad ridiculous regardless.

² By that, I mean that many frameworks provide an interface to do lots of Cool Things™ to almost everything on the page, in the interest of flexibility. However, allowing for this comes at a great cost, especially since in many cases most of the stuff on your site won’t take advantage of any of the additional features, yet still incurs the overhead.

³ Yes, I realise the irony that NodeBB itself is not a full PWA, but this is something we definitely want to achieve for v2.

Cover photo: Luca Campioni via Unsplash